- #Text editor for mac won't crash with large data how to#

- #Text editor for mac won't crash with large data pro#

- #Text editor for mac won't crash with large data free#

To learn more about mysqlimport, it’s best to refer to the command on your system. If you need to use a table name that is different than the filename, you can create a symbolic link: If you created the table using Sequel Pro, this should be easy to accomplish. The table name should match the CSV name. Mysqlimport -ignore-lines=1 -fields-terminated-by=, -fields-optionally-enclosed-by='\"' -verbose -local -u -p I have enclosed data you must change in brackets (). Use the following command to import your CSV file. Once the new table is created, go ahead and delete the rows in the table in preparation for the import of the large version of the CSV. In our example case, the table name should be very_large_nov_2019. Make sure the table name matches the name of the original CSV file.

#Text editor for mac won't crash with large data pro#

Then, utilize the Sequel Pro import method described above.

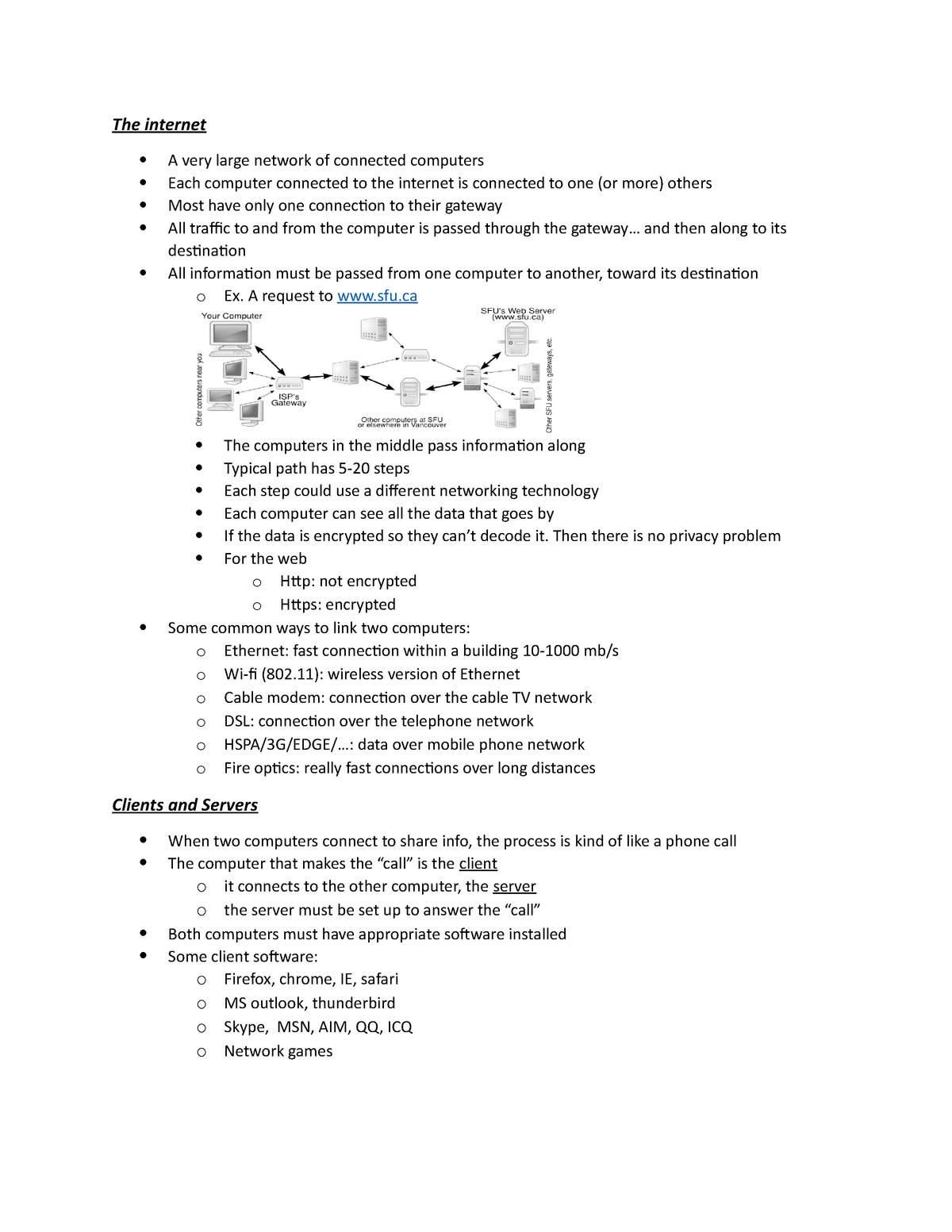

I prefer to use a text editor to open the large CSV and then copy and paste the first 10-20 lines into a new text editor window and saving that file with a. Additionally, you can do complex sorting, filtering, and transformation operations if you are proficient with SQL.įor the sake of this example, let’s assume we are working with a CSV file >1GB that is called very_large_nov_2019.csv.įirst, you must create a CSV file contain only the first 10-20 lines of your large CSV file, we will call it very_large_nov_2019_abridged.csv. MySQL Import Using Sequel Pro and the Command Line (Working Solution)įinally! I found a solution that works reliably for large CSVs. Specifically, my 1.32 GB CSV made Sequel Pro crash instantly. Just like the other methods, the program crashed or froze when sufficiently large files were attempted. This is especially important if later importing a much larger file into the same table. It’s best practice to name the table the same name as the file.Select the appropriate CSV files and make sure the import settings match your file’s needs.Select the database you want to import into (or create a new one) and then go to File -> Import….Here is a quick overview on how that works: It has a great CSV import feature because it will help generate a table based on the CSV automatically.

#Text editor for mac won't crash with large data free#

Sequel Pro is a free MySQL graphical management application for Macs. I had Sequel Pro installed so I decided to give this method an attempt. Many database management programs give you the ability to import a CSV file. Like I said earlier in the post, I desired a free solution so I kept researching. It’s a great solution if you don’t mind paying. The paid plans support up to 20 million rows. If your data is column-heavy, this may be a great solution.

They have a free plan that has a limit of 5 million rows. They built a web tool to solve this exact problem. I found a great SaaS solution for the problem, called CSV Explorer. This is based on my memory from several months ago, I may test to get some better data around when it starts to crash. However, it definitely started getting really slow and crashing with files greater than a hundred megabytes. I was hoping this would be my answer to manipulating large CSVs.

Google released a handy tool now called OpenRefine that enables lots of handy data manipulation operations. Additionally, Excel’s file size limit appears to be 2 gigabytes, so it won’t work well for anything larger than that. Typically, opening large CSVs is a relatively rare occurrence unless your a data analyst, so I tried to find a free solution that would work well. Excel for Mac performed well but it is a paid solution so I did not consider it viable for many developers. Google Sheets worked for some files but would tend to crash or hang past a certain file size. It has a hard row limit that seemed to be independent of file size. If you would like to skip the story and go straight to the best free solution, please click here. I’m using a 2018 Macbook Pro with a 2.6 GHz 6-core i7 and 16 GB of RAM. In this post, I’ve outlined the different ways I’ve tried to manipulate large CSVs and their results. A good text editor can open these large CSVs but you lose spreadsheet capabilities like rearranging columns and filtering data.

#Text editor for mac won't crash with large data how to#

I set out to figure out how to open and manipulate these files in a free and somewhat accessible way that was fast and didn’t risk crashes.Īs a software engineer with a history of supporting marketing teams, I often encounter extremely large datasets including customer segment exports or analytics event logs that can be larger than a gigabyte. Have you ever struggled to open and manipulate a large CSV file on a Mac? 100MB? 1 gigabyte or greater? Extremely large CSVs bring most spreadsheet utilities to a halt or the computer. How To Open & Manipulate Large (>100MB) CSV Files On A Mac
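The command-line steps described in this post can be collected into a small bash sketch. It rests on several assumptions.

- **Helper names:** `make_abridged`, `link_for_table` and `import_csv` are hypothetical helpers, not part of any tool.
- **Placeholders:** the user, database and file names are placeholders.
- **Flags:** the `mysqlimport` flags are the ones from the command above.
- **Sample file:** using `head` to build the abridged file is a swapped-in alternative to copying and pasting in a text editor. It counts physical lines, so a CSV with quoted fields that contain newlines could be cut mid-record.
- **Server setting:** importing with `--local` may also require `local_infile` to be enabled on the MySQL server.

```bash
#!/usr/bin/env bash
# Sketch of the command-line half of the large-CSV workflow.
# Hypothetical helper names; user/database/file names are placeholders.
set -euo pipefail

# Copy the first N lines (default 20, header included) of a large CSV into a
# small sample file that Sequel Pro can open to create the table.
# Note: counts physical lines, so quoted fields containing newlines may be cut.
make_abridged() {
  local src=$1 dest=$2 n=${3:-20}
  head -n "$n" "$src" > "$dest"
}

# mysqlimport takes the table name from the file name minus its extension,
# so a symlink named <table>.csv (created in the current directory) lets you
# import a CSV whose file name differs from the table name.
link_for_table() {
  local csv=$1 table=$2
  if [ -e "$table.csv" ] || [ -L "$table.csv" ]; then
    echo "refusing to overwrite existing $table.csv" >&2
    return 1
  fi
  ln -s "$csv" "$table.csv"
}

# Import a CSV with the options from the post: skip the header row,
# comma-separated, optionally double-quoted fields, file read by the client.
# -p with no attached value prompts for the password; $db is the database.
import_csv() {
  local user=$1 db=$2 csv=$3
  mysqlimport --ignore-lines=1 \
    --fields-terminated-by=, \
    --fields-optionally-enclosed-by='"' \
    --verbose --local \
    -u "$user" -p "$db" "$csv"
}

# Example (placeholder names, not run here):
#   make_abridged very_large_nov_2019.csv very_large_nov_2019_abridged.csv
#   # create table very_large_nov_2019 in Sequel Pro from the abridged file,
#   # delete its rows, then:
#   import_csv myuser mydb very_large_nov_2019.csv
```

Instead of deleting the rows by hand, you could pass mysqlimport's `--delete` option, which empties the table before importing the file.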
